- NVIDIA Rubin CPX GPU targets long-context AI, from million-token coding to video.

- Packs 30 petaflops of NVFP4 compute, 128GB of GDDR7 memory, and 3x faster attention than GB300 NVL72.

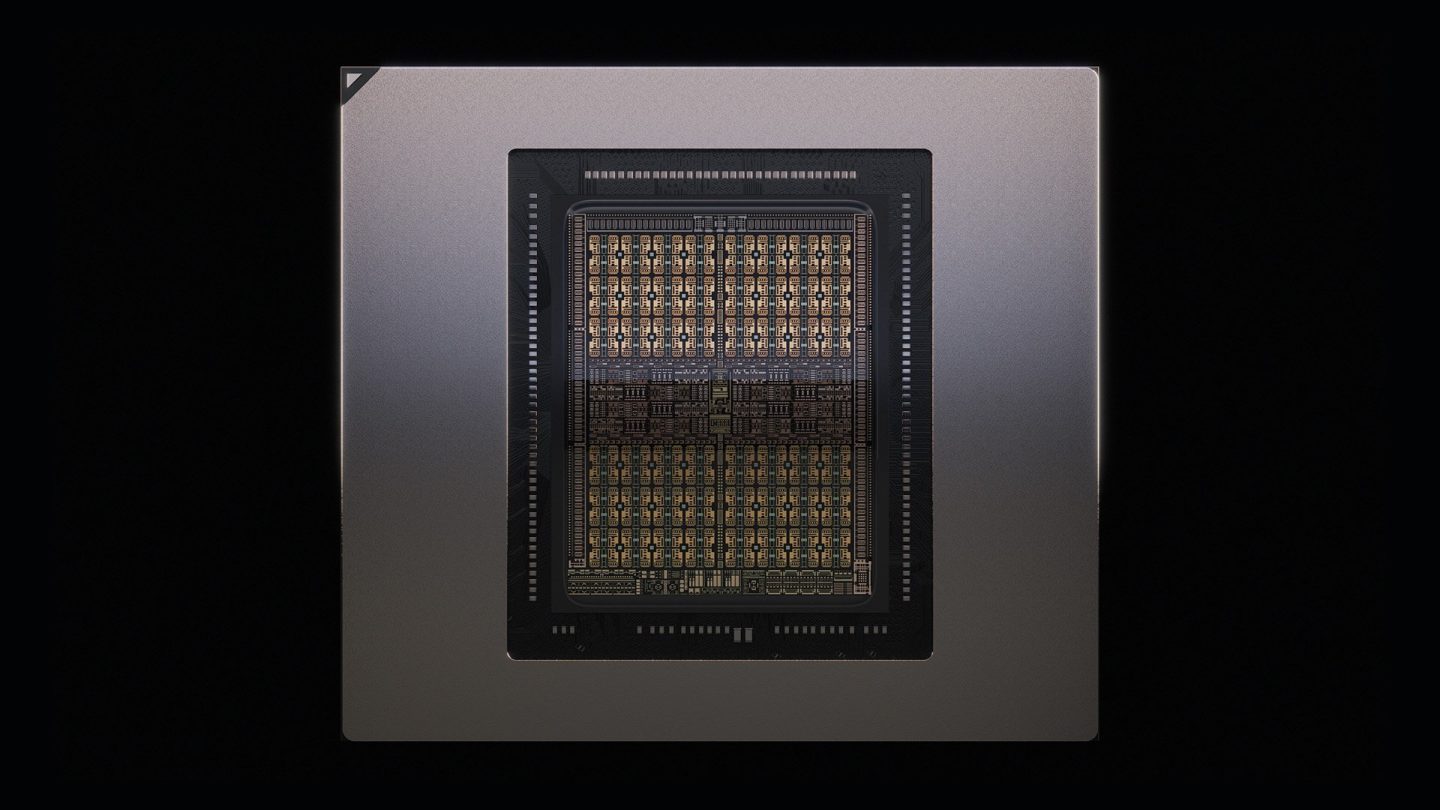

NVIDIA has introduced the Rubin CPX, a new type of GPU designed for long-context AI processing. The chip is built to handle workloads that require models to process millions of tokens at once – whether that’s generating code across entire software projects or working with hour-long video content.

Rubin CPX works alongside NVIDIA’s Vera CPUs and Rubin GPUs inside the Vera Rubin NVL144 CPX platform. A single rack delivers 8 exaflops of AI compute, with 7.5 times the performance of NVIDIA’s GB300 NVL72 system, along with 100 terabytes of fast memory and bandwidth of 1.7 petabytes per second. Customers can also adopt Rubin CPX in other system configurations, including options that reuse existing infrastructure.

Jensen Huang, NVIDIA’s founder and CEO, described the launch as a turning point: “The Vera Rubin platform will mark another leap in the frontier of AI computing – introducing both the next-generation Rubin GPU and a new category of processors called CPX. Just as RTX revolutionised graphics and physical AI, Rubin CPX is the first CUDA GPU purpose-built for massive-context AI, where models reason in millions of tokens of knowledge at once.”

From coding to video generation

The demand for long-context processing is growing fast. Today’s AI coding assistants are limited to smaller code blocks, but Rubin CPX is designed to manage far larger projects, with the ability to scan and optimise entire repositories. In video, where an hour of content can take up to one million tokens, Rubin CPX combines video encoding, decoding, and inference processing in a single chip, making tasks like video search and generative editing more practical.
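To put the video figure in perspective, the short sketch below converts the article's upper bound of one million tokens per hour into a per-second rate. It assumes a constant token rate across the clip, which is a simplification; the function name `tokens_for_video` is purely illustrative.

```python
# Back-of-the-envelope token rate for long video, using the article's figure
# of up to one million tokens per hour of content.
TOKENS_PER_HOUR = 1_000_000  # upper bound quoted in the article
SECONDS_PER_HOUR = 3_600


def tokens_for_video(duration_seconds: float) -> int:
    """Estimate the token count for a clip, assuming a constant token rate."""
    return round(duration_seconds * TOKENS_PER_HOUR / SECONDS_PER_HOUR)


tokens_per_second = TOKENS_PER_HOUR / SECONDS_PER_HOUR
print(f"{tokens_per_second:.1f} tokens per second of video")
print(tokens_for_video(10 * 60), "tokens for a 10-minute clip")
```

At that rate, every second of footage adds roughly 278 tokens to the context, which is why an hour-long clip quickly outgrows the context windows of most current models.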

The GPU uses a monolithic die design based on the Rubin architecture, packed with NVFP4 compute resources for high efficiency. It delivers up to 30 petaflops of compute with NVFP4 precision and comes with 128GB of GDDR7 memory. Compared to NVIDIA’s GB300 NVL72 system, it provides triple the speed for attention mechanisms, helping models handle longer sequences without slowing down.

Rubin CPX can be deployed with NVIDIA’s InfiniBand fabric or Spectrum-X Ethernet networking for scale-out computing. In the flagship NVL144 CPX system, NVIDIA says customers could generate $5 billion in token revenue for every $100 million invested.

Beyond Rubin CPX itself, NVIDIA also highlighted results from the latest MLPerf Inference benchmarks. Blackwell Ultra set records on new reasoning benchmarks like DeepSeek R1 and Llama 3.1 405B, with NVIDIA being the only platform to submit results for the most demanding interactive scenarios.

The company also set records on the new Llama 3.1 8B tests, Whisper speech-to-text, and graph neural networks. NVIDIA credited these wins partly to a technique called disaggregated serving, which separates the compute-heavy context phase of inference from the bandwidth-heavy generation phase. Optimising the two phases independently raised throughput per GPU by nearly 50%.
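The idea behind disaggregated serving can be illustrated with a toy scheduler. The sketch below is not NVIDIA's implementation or the Dynamo API; the `Request` and `DisaggregatedServer` classes are hypothetical and only show how requests might pass from a context queue to a separate generation queue, so that each phase can be scaled and tuned on its own.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    request_id: int
    prompt_tokens: int   # work for the context (prefill) phase
    output_tokens: int   # work for the generation (decode) phase
    kv_cache_ready: bool = False
    generated: int = 0


class DisaggregatedServer:
    """Toy model with separate queues for the context and generation phases."""

    def __init__(self) -> None:
        self.context_queue: deque = deque()
        self.generation_queue: deque = deque()
        self.finished: list = []

    def submit(self, request: Request) -> None:
        self.context_queue.append(request)

    def run_context_step(self) -> None:
        """Compute-heavy phase: process one whole prompt, then hand it off."""
        if not self.context_queue:
            return
        request = self.context_queue.popleft()
        request.kv_cache_ready = True  # stands in for transferring the KV cache
        self.generation_queue.append(request)

    def run_generation_step(self) -> None:
        """Bandwidth-heavy phase: emit one token for each active request."""
        still_active: deque = deque()
        while self.generation_queue:
            request = self.generation_queue.popleft()
            request.generated += 1
            if request.generated >= request.output_tokens:
                self.finished.append(request)
            else:
                still_active.append(request)
        self.generation_queue = still_active


server = DisaggregatedServer()
server.submit(Request(request_id=1, prompt_tokens=100_000, output_tokens=3))
server.submit(Request(request_id=2, prompt_tokens=2_000, output_tokens=2))

# Alternate the two phases until every request has finished generating.
while server.context_queue or server.generation_queue:
    server.run_context_step()
    server.run_generation_step()

print([r.request_id for r in server.finished])
```

In a real deployment the two phases would run on different hardware pools rather than in one loop, which is the role the article describes for a context-focused processor such as Rubin CPX alongside the generation-focused GPUs.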

NVIDIA tied these results directly to AI economics. For example, a free GPU with a quarter of Blackwell’s performance could generate about $8 million in token revenue over three years, while a $3 million investment in GB200 infrastructure could generate around $30 million. The company framed performance as the key lever for AI factory ROI.
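The arithmetic behind that comparison is simple enough to check. The sketch below uses only the dollar figures quoted above and ignores power, cooling, staffing, and other operating costs, which the article does not break out, so treat the result as a rough illustration rather than a full ROI model. The helper `revenue_multiple` is a hypothetical name.

```python
def revenue_multiple(token_revenue_usd: float, investment_usd: float) -> float:
    """Return token revenue generated per dollar invested."""
    if investment_usd <= 0:
        raise ValueError("investment must be positive to compute a multiple")
    return token_revenue_usd / investment_usd


# Figures quoted in the article (three-year token revenue, in US dollars).
FREE_GPU_REVENUE = 8_000_000   # free GPU with a quarter of the performance
PAID_INVESTMENT = 3_000_000    # the $3 million infrastructure investment
PAID_REVENUE = 30_000_000      # revenue attributed to that investment

paid_multiple = revenue_multiple(PAID_REVENUE, PAID_INVESTMENT)
net_paid = PAID_REVENUE - PAID_INVESTMENT
advantage = net_paid - FREE_GPU_REVENUE

print(f"Paid system: {paid_multiple:.0f}x revenue per dollar invested")
print(f"Net of hardware cost: ${net_paid:,}")
print(f"Advantage over the free GPU: ${advantage:,}")
```

On these numbers the paid system still comes out about $19 million ahead after its purchase price is subtracted, which is the point NVIDIA is making about performance outweighing hardware cost.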

Rubin CPX is positioned as the first of a new class of “context GPUs” in NVIDIA’s roadmap. The company is also advancing the Rubin Ultra GPU, Vera CPUs, NVLink Switch, Spectrum-X Ethernet, and CX9 SuperNICs – all designed to work together in a one-year upgrade cycle. The roadmap emphasises NVIDIA’s push for full-stack solutions, not just standalone chips.

Industry adoption

Several AI companies are preparing to use Rubin CPX. The company behind Cursor, the AI-powered code editor, expects the GPU to support faster code generation and developer collaboration. “With NVIDIA Rubin CPX, Cursor will be able to deliver lightning-fast code generation and developer insights, transforming software creation,” said Michael Truell, CEO of Cursor.

Runway, a generative AI company focused on video, sees Rubin CPX as central to its work on creative workflows. “Video generation is rapidly advancing toward longer context and more flexible, agent-driven creative workflows,” said Cristóbal Valenzuela, CEO of Runway. “We see Rubin CPX as a major leap in performance, supporting these demanding workloads to build more general, intelligent creative tools.”

Magic, an AI company building foundation models for software agents, highlighted the ability to process larger contexts. “With a 100-million-token context window, our models can see a codebase, years of interaction history, documentation and libraries in context without fine-tuning,” said Eric Steinberger, CEO of Magic.

Supported by NVIDIA’s AI stack

Rubin CPX is integrated into NVIDIA’s broader AI ecosystem, including its software stack and developer tools. The GPU will support the company’s Nemotron multimodal models, delivered through NVIDIA AI Enterprise. It also connects with the Dynamo platform, which helps improve inference efficiency and reduce costs for production AI.

Backed by CUDA-X libraries and NVIDIA’s community of millions of developers, Rubin CPX extends the company’s push into large-scale AI, offering new tools for industries where context size and performance define what’s possible.

AI News is powered by TechForge Media.